
Working with interview data: SSHOC workshop on a multidisciplinary approach to the use of technology in research

Date: 08 November 2019

Do you work with interviews as research material? Are you tired of transcribing audio and processing the resulting transcriptions by hand? If so, a group of oral historians, language researchers and technical experts might have just what you need. They have set up the Oral History & Technology taskforce to help researchers deal with their audio data.

DISCOVERING THE POTENTIAL OF AUDIO MATERIAL

At the Digital Humanities 2019 conference in Utrecht in July, this group collaborated with SSHOC and CLARIN ERIC to organise a workshop demonstrating how audio material can be digitised, converted into text by automatic speech recognition, and further processed with NLP and annotation tools.

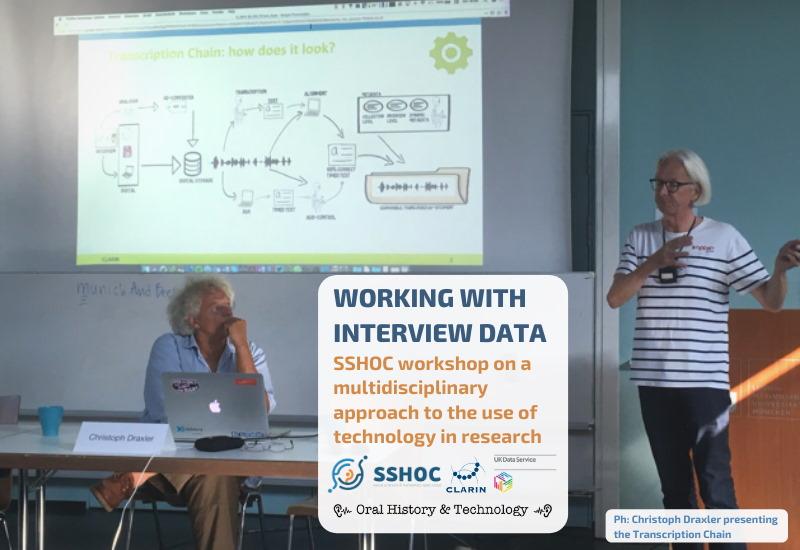

This was the first SSHOC training workshop, addressing one of the project's main goals: providing researchers within the Social Sciences and Humanities with common web-based tools that can assist them in their research throughout the data lifecycle. The focus of this workshop was on the data creation phase for audio material. Three of the five lecturers were part of the Oral History & Technology research group: Christoph Draxler (Ludwig-Maximilians-Universität München), Stef Scagliola (C2DH, University of Luxembourg) and Louise Corti (UK Data Archive). The other two, Jeannine Beeken (UK Data Archive) and Khiet Truong (University of Twente), are external colleagues.

NEW DEVELOPMENTS IN THE PROCESSING OF AUDIO MATERIAL

The workshop brought together people from a wide range of disciplines, including (oral) historians, linguists, and language and speech technology experts, as well as psycholinguists, mental health experts and social signal processing scholars. They came to learn about and discuss the latest developments of the Transcription Chain (T-Chain), in which various open source tools are combined to support transcription and alignment of oral and written text in different languages. New NLP tools to process the resulting texts were also presented.

The workshop started with a short introduction by Louise Corti and Stef Scagliola on Digital Humanities approaches to interview data, which centred on the question of whether historians, linguists and social scientists can share tools. This was followed by a more technical session by Christoph Draxler, who described the preparation of audio data and discussed automatic speech recognition (ASR), the correction of ASR results and possible steps for improved readability. He demonstrated the OH-Portal (v1.0.2), a powerful and easy-to-use prototype of the T-Chain, and also made recommendations for successful use of the portal:

- There is no free lunch. You will have to do proper work to obtain good results.

- Split long interviews into meaningful chunks of 5-15 minutes.

- With good audio quality and a good recogniser, you can transcribe more quickly with ASR and manual correction than through only manual transcription.

- The full Transcription Chain from upload to word alignment is in operation.

- Users have a 24-hour window to download files before the OH-Portal removes them.

- There is also a conversion tool for various audio formats, called AudioEnhance, at the Bavarian Archive for Speech Signals.
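The recommendation to split long interviews into chunks of 5-15 minutes can be sketched as a small planning step. The following Python snippet is only an illustration and not part of the OH-Portal: the function name `plan_chunks` and its default values are assumptions made for this example. It proposes roughly equal time ranges, which you would then shift by hand to meaningful boundaries such as a change of topic or a pause.

```python
def plan_chunks(total_seconds, target_seconds=600, max_seconds=900):
    """Propose (start, end) times in seconds for roughly equal chunks.

    Hypothetical helper: defaults aim at 10-minute chunks and never
    exceed 15 minutes, in line with the 5-15 minute recommendation.
    """
    if total_seconds <= 0:
        return []
    # Short recordings are kept in one piece.
    if total_seconds <= max_seconds:
        return [(0.0, float(total_seconds))]
    # Start from the number of chunks closest to the target length.
    n = max(1, round(total_seconds / target_seconds))
    # Add chunks until no chunk is longer than the maximum.
    while total_seconds / n > max_seconds:
        n += 1
    length = total_seconds / n
    chunks = []
    for i in range(n):
        start = i * length
        # Pin the last end to the exact duration to avoid rounding drift.
        end = float(total_seconds) if i == n - 1 else (i + 1) * length
        chunks.append((start, end))
    return chunks


# A one-hour interview becomes six proposed 10-minute chunks.
for start, end in plan_chunks(3600):
    print(f"{start / 60:5.1f} min to {end / 60:5.1f} min")
```

The time ranges are only a starting point. Cutting the audio itself can be done with any audio editor, and the boundaries should be adjusted so that no speaker turn is split in the middle.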

Photo: The OH-Portal

TEXT ANALYSIS

The second part of the workshop was dedicated to the analysis of the materials. First, Jeannine Beeken (UK Data Archive) addressed linguistic analysis of data, presenting some free tools, such as a keyword extractor and a summarisation tool, and offering a short hands-on activity on her demo corpus using Sketch Engine. She pointed to key challenges for linguistic tools that analyse human communication, such as disambiguation by tokenizers, grouping by stemmers and filtering by stopwords, and elaborated on the challenges posed by punctuation marks. These are invaluable for the interpretation of the text on several levels, but unfortunately a standard speech recogniser does not output punctuation marks. She offered an example:

The panda eats shoots and leaves (a reference to the panda’s food) vs. The panda eats, shoots and leaves (a killer panda!)
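The effect of missing punctuation can be shown with a few lines of Python. This is a minimal sketch, not one of the tools presented at the workshop: the regular-expression tokenizer, the tiny `STOPWORDS` set and the function names are all assumptions chosen for illustration. It shows that the two panda sentences are distinguishable only while the comma is kept as a token.

```python
import re

# Tiny illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"the", "and", "a", "an", "of"}


def tokenize(text, keep_punctuation=True):
    """Lowercase the text and split it into word and punctuation tokens."""
    pattern = r"\w+|[^\w\s]" if keep_punctuation else r"\w+"
    return re.findall(pattern, text.lower())


def content_words(tokens):
    """Drop punctuation tokens and stopwords."""
    return [t for t in tokens if t.isalnum() and t not in STOPWORDS]


food = "The panda eats shoots and leaves"
killer = "The panda eats, shoots and leaves"

# With punctuation, the comma keeps the two readings apart.
print(tokenize(food) == tokenize(killer))                # False
# Without punctuation, as in raw ASR output, they collapse into one.
print(tokenize(food, False) == tokenize(killer, False))  # True
print(content_words(tokenize(killer)))
```

Once the comma is gone, no later processing step such as stopword filtering can recover which reading was meant, which is why punctuation has to be restored manually or by a separate tool after speech recognition.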

Afterwards, Khiet Truong (University of Twente), who presented remotely, spoke about emotion extraction. She showed the simple picture below and asked participants to decide whether the couple were singing, arguing or laughing.

By only reading oral history, she said, we may miss important emotions underpinning the conversation. Studying social signal processing opens up the opportunity to re-interpret an interview, for example by reflecting on the function of silence. She also showed her work on tools for automatic emotion recognition directly from the speech signal.

INTENSIVE, VALUABLE AND INSPIRING

The workshop concluded with a fruitful discussion. Participants were curious, for example, about the quality of ASR for regional or dialectal forms of speech and about data protection after upload to the server. There were also requests for clear guidelines on how to use the tools, for recognisers that can cope with multilingual conversations and data, and for easy-to-read text output formats that show the contributions of the various speakers.

DO YOU WANT TO SEE FOR YOURSELF?

Interviews are carried out by researchers in many disciplines, and the data generated from these interviews allow rich knowledge transfer. Given that the T-Chain meets the needs of many researchers across disciplines, the Oral History & Technology research group plans to hold further workshops in the near future. In addition, the SSHOC community will be able to benefit from a webinar on the use of the T-Chain planned for the first half of 2020. If you want to be alerted to SSHOC events and developments, sign up to the newsletter here.

LINKS TO WORKSHOP MATERIALS

- Workshop programme

- Workshop slides

- Short paper about the Transcription Chain

- Multilingual videoclips with speech technologist Henk van den Heuvel, linguist Silvia Calamai, and data curator Louise Corti on the potential of speech recognition and the importance of research in the field of oral history.

The blog post was written by Henk van den Heuvel (CLST, Radboud University), Stef Scagliola (University of Luxembourg) and Louise Corti (UK Data Archive), and edited by Kristina Pahor de Maiti (University of Ljubljana).

Cover Photo: Christoph Draxler presenting the Transcription Chain.